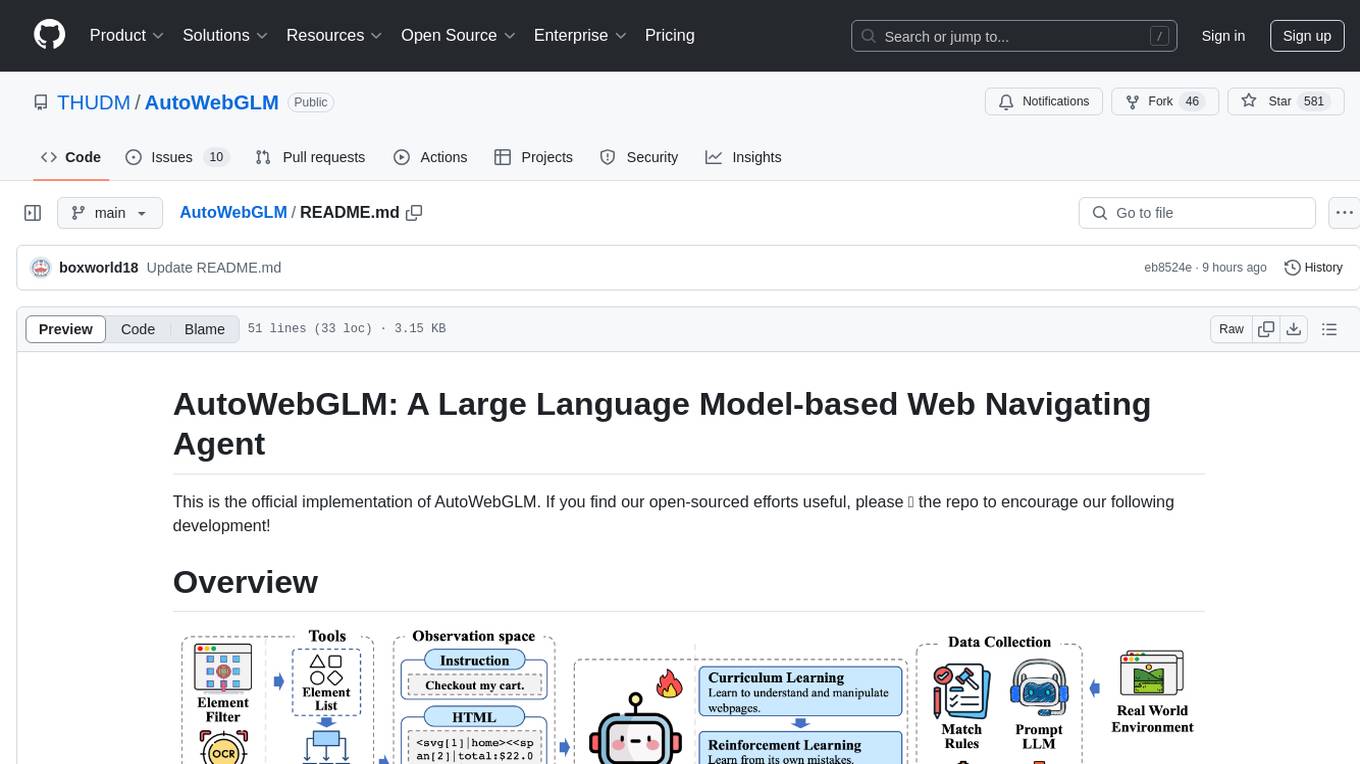

AI tools for model modification

Related Tools:

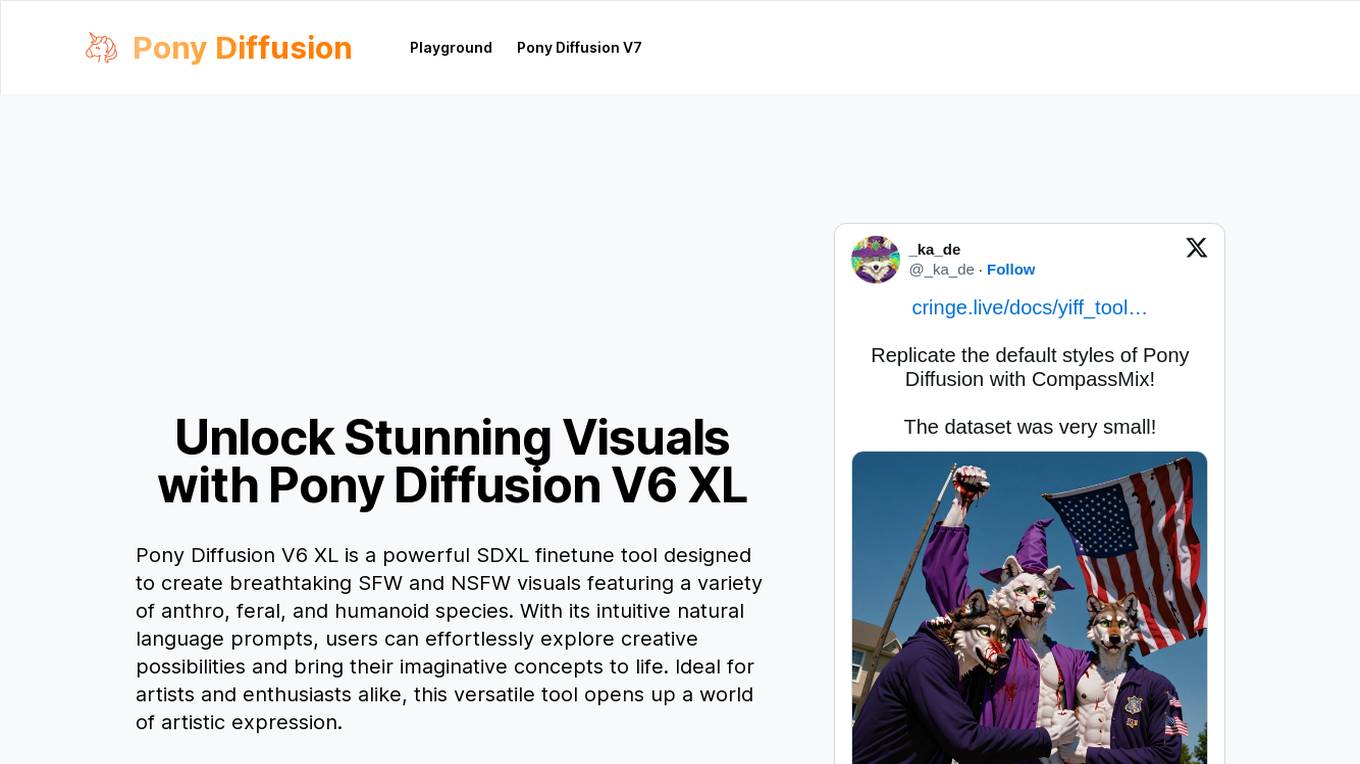

Pony Diffusion

Pony Diffusion V6 XL is a text-to-image generation model for creating SFW and NSFW visuals featuring various species. It offers an intuitive interface, community engagement, and an open-access license, making it well suited to artists and enthusiasts who want to explore creative possibilities and bring imaginative concepts to life.

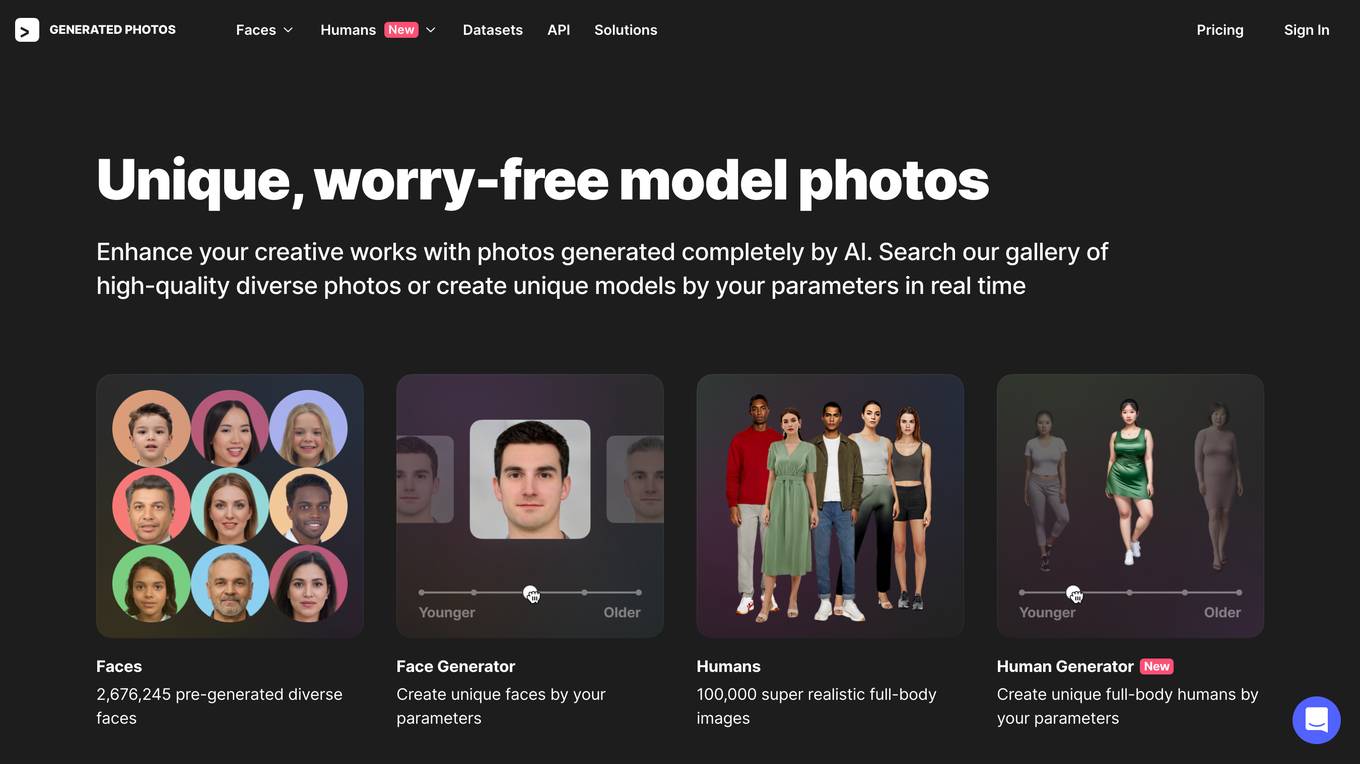

Generated Photos

Generated Photos is an AI-powered application that allows users to create unique, worry-free model photos using advanced AI-generated faces and full-body human generators. With a vast database of pre-generated diverse faces and realistic full-body images, users can enhance their creative works with high-quality photos. The application is perfect for various purposes such as ads, design, marketing, research, and machine learning. Users can search the gallery, create unique models in real-time, and even modify their own photos using the Face Generator and Human Generator tools. Generated Photos offers bulk download options, datasets, and API integration for large projects, making it a versatile tool for businesses and individuals seeking generative media solutions.

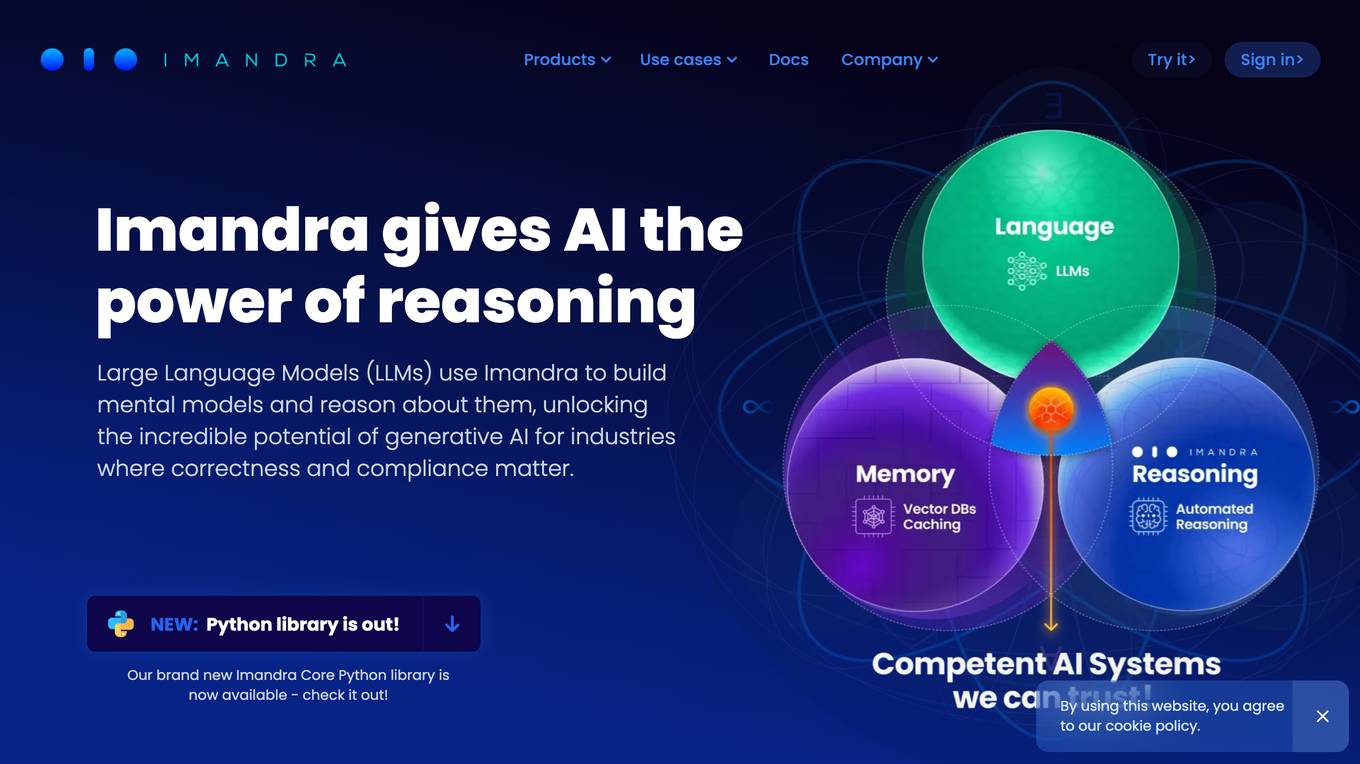

Imandra

Imandra is a company that provides automated logical reasoning for Large Language Models (LLMs). Imandra's technology allows LLMs to build mental models and reason about them, unlocking the potential of generative AI for industries where correctness and compliance matter. Imandra's platform is used by leading financial firms, the US Air Force, and DARPA.

Google Gemma

Google Gemma is a family of lightweight, state-of-the-art open language models developed by Google, built from the same research used to create Google's Gemini models. Gemma models come in two sizes, 2B and 7B parameters, each with a base (pre-trained) and an instruction-tuned variant. They are designed to be cross-device compatible and are optimized for Google Cloud and NVIDIA GPUs. They are accessible through Kaggle, Hugging Face, and Google Cloud (Vertex AI or GKE). Gemma models can be used for applications such as text generation, summarization, and RAG, in both commercial and research settings.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to continue advancing in detecting harmful manipulated media.

Fooocus

Fooocus is a cutting-edge AI-powered image generation and editing platform that empowers users to bring their creative visions to life. With advanced features like unique inpainting algorithms, image prompt enhancements, and versatile model support, Fooocus stands out as a leading platform in creative AI technology. Users can leverage Fooocus's capabilities to generate stunning images, edit and refine them with precision, and collaborate with others to explore new creative horizons.

Nano Banana

Nano Banana is a revolutionary AI-powered image generation and editing platform that offers advanced AI technology to transform creative ideas into stunning images. Unlike traditional text-to-image models, Nano Banana combines text and image inputs for seamless visual concept extraction and modification, delivering consistent character editing and scene preservation. With features like in-context image generation, character consistency, and local editing precision, Nano Banana provides a superior performance that surpasses competing models. Users can experience next-generation AI image editing with Nano Banana's innovative technology, making it a valuable tool for professional creatives and content professionals.

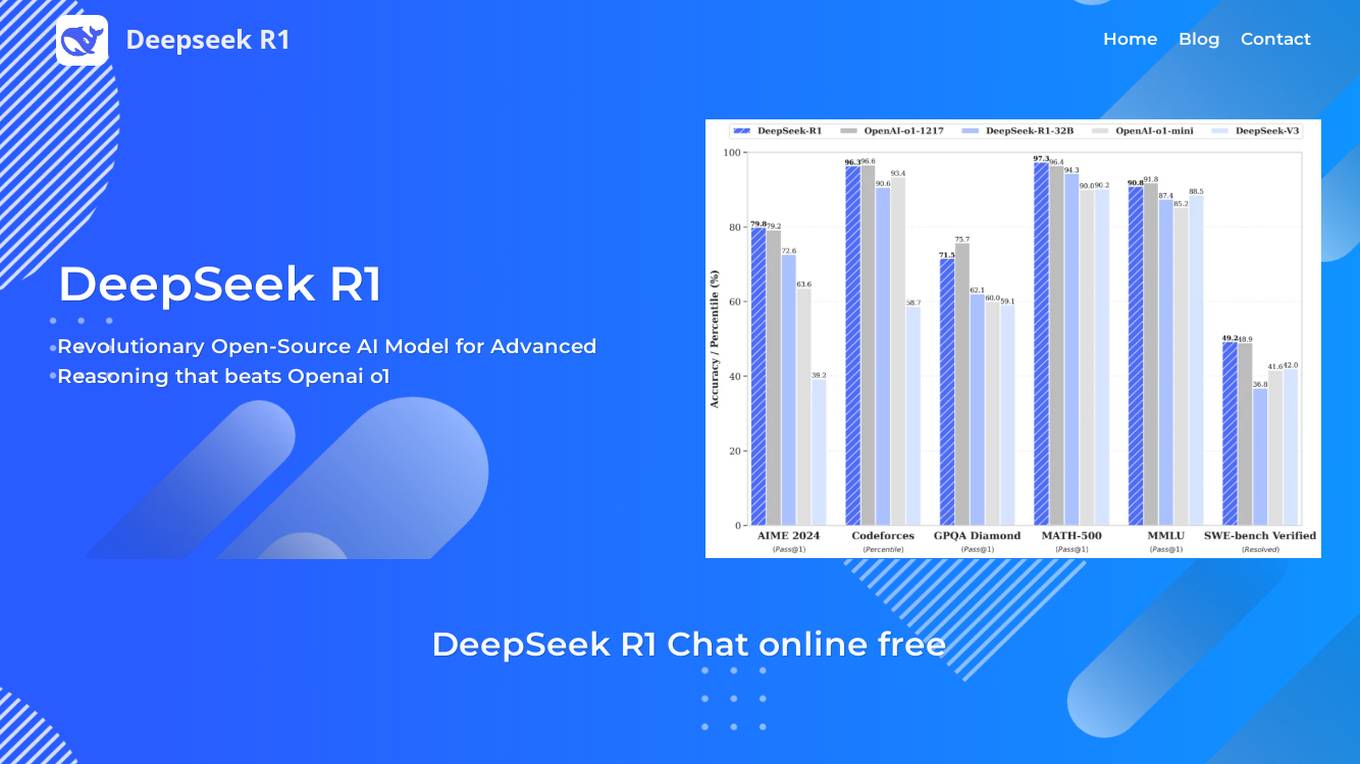

DeepSeek R1

DeepSeek R1 is a revolutionary open-source AI model for advanced reasoning that outperforms leading AI models in mathematics, coding, and general reasoning tasks. It utilizes a sophisticated MoE architecture with 37B active/671B total parameters and 128K context length, incorporating advanced reinforcement learning techniques. DeepSeek R1 offers multiple variants and distilled models optimized for complex problem-solving, multilingual understanding, and production-grade code generation. It provides cost-effective pricing compared to competitors like OpenAI o1, making it an attractive choice for developers and enterprises.
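
As a rough illustration of what "37B active/671B total parameters" means in a mixture-of-experts (MoE) model, the sketch below shows generic top-k gating: a router scores every expert, but only the k best-scoring experts run for a given token. The `route` function, the example scores, and the choice of k are hypothetical and are not taken from DeepSeek R1's actual router, whose details are not given here.

```python
import math

def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def route(scores, k):
    """Generic top-k gating (illustrative, not DeepSeek's router).

    Returns {expert_index: gate_weight} for the k highest-scoring experts.
    Experts that are not selected do no work for this token.
    """
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    return dict(zip(top, weights))

# Fraction of parameters active per token, from the figures quoted above.
active_fraction = 37 / 671

if __name__ == "__main__":
    # Hypothetical router scores for four experts; only two are used.
    print(route([0.1, 2.0, -1.0, 1.5], k=2))
    print(f"active fraction: {active_fraction:.1%}")
```

With the quoted figures, roughly one parameter in eighteen takes part in processing any single token, which is why an MoE model can be much cheaper to run than a dense model of the same total size.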

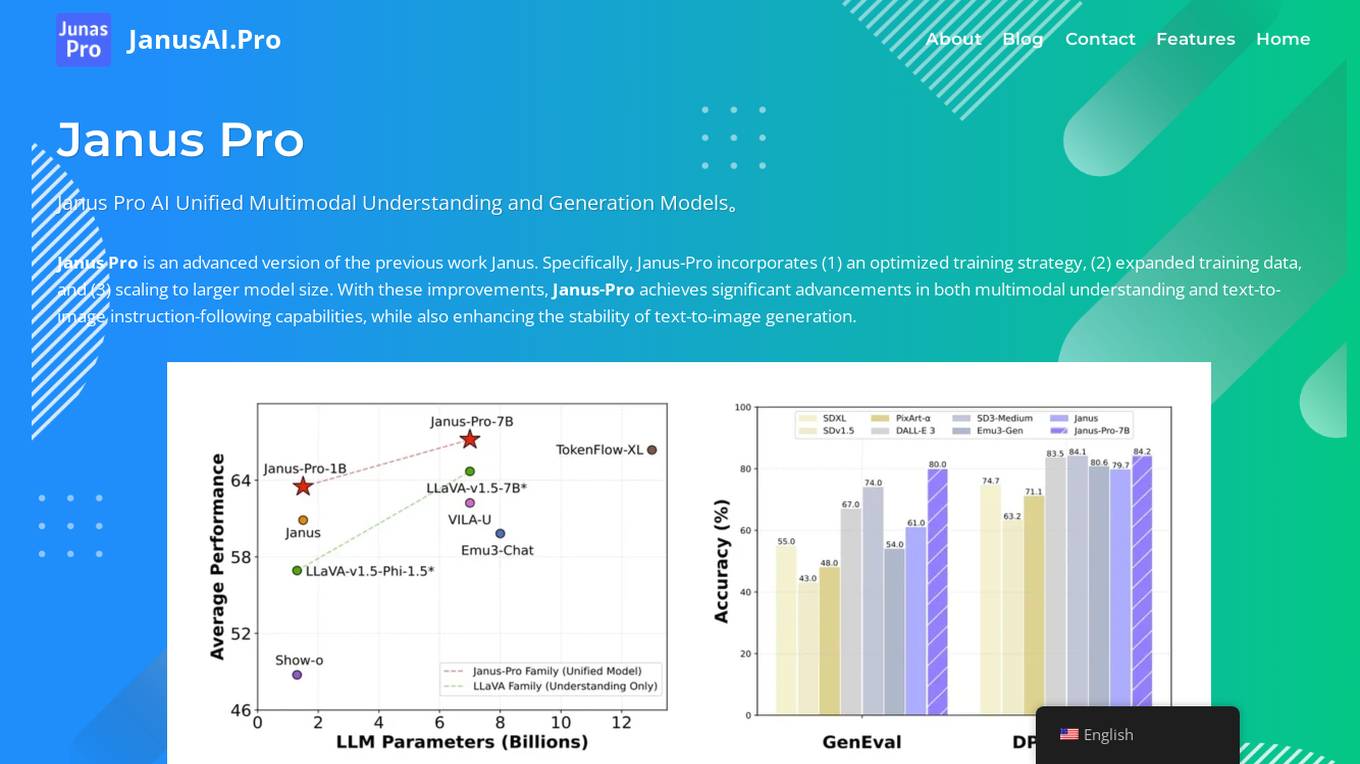

Janus Pro AI

Janus Pro AI is an advanced unified multimodal AI model that combines image understanding and generation capabilities. It incorporates optimized training strategies, expanded training data, and larger model scaling to achieve significant advancements in both multimodal understanding and text-to-image generation tasks. Janus Pro features a decoupled visual encoding system, outperforming leading models like DALL-E 3 and Stable Diffusion in benchmark tests. It offers open-source compatibility, vision processing specifications, cost-effective scalability, and an optimized training framework.

Sora AI Tech

Sora AI Tech is an advanced diffusion model capable of generating videos. It starts with a video that looks like static noise and gradually transforms it by removing the noise over many steps to produce a clear video. Sora can generate entire videos at once or extend the length of videos, catering to a wide range of video production needs.
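
The "start from noise, remove it over many steps" idea can be sketched with a toy loop. This is not Sora's model: the learned denoiser is replaced by an oracle that already knows the clean video, and the names `denoise_step`, `generate`, and the `strength` parameter are assumptions made for illustration only.

```python
import random

def mean_abs_error(frames, target):
    """Average absolute per-pixel difference between two tiny 'videos'."""
    diffs = [abs(x - c) for f, t in zip(frames, target) for x, c in zip(f, t)]
    return sum(diffs) / len(diffs)

def denoise_step(frames, clean_estimate, strength):
    """Move every pixel a fraction `strength` toward the denoiser's estimate."""
    return [
        [x + strength * (c - x) for x, c in zip(frame, est)]
        for frame, est in zip(frames, clean_estimate)
    ]

def generate(target, steps=40, strength=0.2, seed=0):
    """Toy iterative denoising over a list of frames (lists of pixel values).

    A real diffusion model predicts the clean signal with a neural network;
    here `target` plays that role as an oracle stand-in.
    """
    rng = random.Random(seed)
    # Begin with pure Gaussian noise in the same shape as the target video.
    frames = [[rng.gauss(0.0, 1.0) for _ in frame] for frame in target]
    errors = [mean_abs_error(frames, target)]
    for _ in range(steps):
        frames = denoise_step(frames, target, strength)
        errors.append(mean_abs_error(frames, target))
    return frames, errors

if __name__ == "__main__":
    video = [[0.0, 0.5, 1.0], [1.0, 0.5, 0.0]]  # two frames, three pixels each
    _, errs = generate(video)
    print(f"error before: {errs[0]:.4f}, after: {errs[-1]:.6f}")
```

Each step removes a fixed share of the remaining noise, so the error shrinks geometrically; all frames are refined together, which mirrors the description of generating an entire video at once.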

Snapmark

Snapmark is a visual UI development tool that helps AI precisely understand user interface modification intent. By selecting page elements directly, Snapmark delivers accurate DOM information to AI, enabling it to generate code that meets expectations. The tool seamlessly integrates with mainstream browsers, IDEs, and AI models to ensure precise code generation. Snapmark leverages advanced AI models like OpenAI's GPT-4 and GPT-3.5, as well as Anthropic's Claude models, for high-quality code generation. It specializes in optimizing code for Next.js framework and Tailwind CSS utility classes, providing a seamless development experience for users.

Poker Bot AI+

Poker Bot AI+ is an advanced poker AI application that offers fully automated poker bots powered by neural networks and machine learning. The application provides a suite of products to enhance poker gameplay, including automated online poker bots, AI advisor PokerX, Poker Ecology service, poker skill development with AI-guided tips, and Android-based poker farms on emulators. It supports various poker games and rooms, ensuring optimal decision-making for players. The software guarantees secure gameplay by emulating human behavior and safeguarding user identity. Before purchasing, the effectiveness of the poker bot is demonstrated privately. Poker Bot AI+ aims to revolutionize the poker industry with cutting-edge AI technology.

Moonbeam

Moonbeam is an AI-powered writing assistant that helps users generate long-form content, such as essays, stories, articles, and blog posts. It offers a variety of features to help users write better content, including a smart chat feature that provides real-time feedback, a content cluster generator that helps users create comprehensive content clusters around a single topic, and a custom style generator that allows users to write in the style of famous authors or celebrities. Moonbeam also offers a collaboration mode that allows users to work together on documents in real-time.

Seabiscuit Business Model Master

Discover A More Robust Business: Craft tailored value proposition statements, develop a comprehensive business model canvas, conduct detailed PESTLE analysis, and gain strategic insights on enhancing business model elements like scalability, cost structure, and market competition strategies. (v1.18)

Business Model Canvas Strategist

Business Model Canvas Creator - Build and evaluate your business model

Business Model Canvas Wizard

Helps you build the Business Model Canvas for your initiative

1970s Beauties

Feeling nostalgic? Recreate model images of gorgeous women from the 1970s. Just describe the setting, and a beautiful '70s woman will appear.

1950s Beauties

Feeling nostalgic? Recreate model images of gorgeous women from the 1950s. Just describe the setting, and a beautiful '50s woman will appear.

Business Model Advisor

Business model expert that creates detailed reports based on business ideas.

Prophet Optimizer

Prophet model expert: professional yet approachable, and asks for clarification when needed.

AI Model NFT Marketplace - Joy Marketplace

Expert on AI Model NFT Marketplace, offering insights on blockchain tech and NFTs.

Modelos de Negocios GPT

Step-by-step guide to creating and improving business models using the Business Model Canvas methodology.

ArchitectAI

A custom GPT model designed to assist in developing personalized software design solutions.

HVAC Apex

Benchmark HVAC GPT model with unmatched expertise and forward-thinking solutions, powered by OpenAI

Discrete Mathematics

Precision-focused Language Model for Discrete Mathematics, ensuring unmatched accuracy and error avoidance.

awesome-MLSecOps

Awesome MLSecOps is a curated list of open-source tools, resources, and tutorials for MLSecOps (Machine Learning Security Operations). It includes a wide range of security tools and libraries for protecting machine learning models against adversarial attacks, as well as resources for AI security, data anonymization, model security, and more. The repository aims to provide a comprehensive collection of tools and information to help users secure their machine learning systems and infrastructure.

abliteration

Abliteration is a tool that allows users to create abliterated models using transformers quickly and easily. It is not a tool for uncensorship, but rather for making models that will not explicitly refuse users. Users can clone the repository, install dependencies, and make abliterations using the provided commands. The tool supports adjusting parameters for stubborn models and offers various options for customization. Abliteration can be used for creating modified models for specific tasks or topics.

Steel-LLM

Steel-LLM is a project to pre-train a Chinese large language model from scratch, using over 1T tokens of data to train a model of around 1B parameters, similar in scale to TinyLlama. The project aims to share the entire process, including data collection, data processing, pre-training framework selection, and model design, and to open-source all the code, so that the work can be reproduced even with limited resources. The name 'Steel' is inspired by the band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous collection of data covering various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

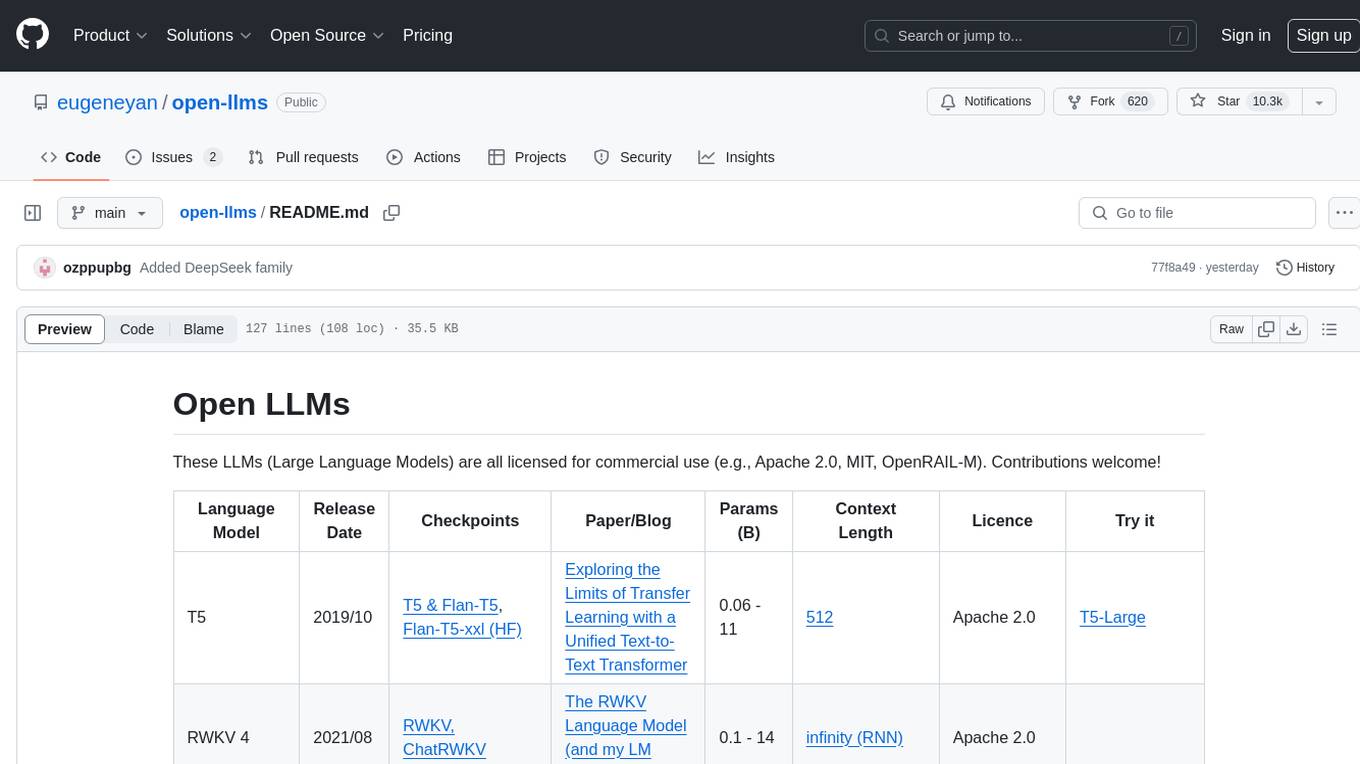

open-llms

Open LLMs is a repository containing various Large Language Models licensed for commercial use. It includes models like T5, GPT-NeoX, UL2, Bloom, Cerebras-GPT, Pythia, Dolly, and more. These models are designed for tasks such as transfer learning, language understanding, chatbot development, code generation, and more. The repository provides information on release dates, checkpoints, papers/blogs, parameters, context length, and licenses for each model. Contributions to the repository are welcome, and it serves as a resource for exploring the capabilities of different language models.

tt-forge

TT-Forge is Tenstorrent's MLIR-based compiler. It integrates with compiler technologies from various AI/ML frameworks to enable running models and generating custom kernels. It aims to abstract over different frontend frameworks, compile a range of model architectures with good performance, and abstract over all Tenstorrent device architectures. The repository serves as the central hub for the tt-forge compiler project, bringing together sub-projects into a cohesive product with releases, demos, model support, roadmaps, and key resources. Users can explore the documentation for individual front ends to get started running tests and demos.

Consistency_LLM

Consistency Large Language Models (CLLMs) are a family of parallel decoders that reduce inference latency by decoding multiple tokens in parallel. The models are trained to perform efficient Jacobi decoding, mapping any randomly initialized token sequence to the same result as auto-regressive decoding in as few steps as possible. CLLMs have shown significant improvements in generation speed on various tasks, achieving up to 3.4 times faster generation. The approach integrates with other techniques for efficient Large Language Model (LLM) inference and does not require draft models or architectural modifications.
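
To make the Jacobi decoding idea concrete, here is a minimal sketch using a toy deterministic function in place of a language model. `next_token`, `VOCAB_SIZE`, and both decode functions are hypothetical stand-ins and are not part of the CLLM codebase. The point it shows is that repeatedly updating all positions in parallel from a random guess reaches the same sequence that greedy auto-regressive decoding produces.

```python
import random

VOCAB_SIZE = 50  # size of the toy vocabulary (assumption for this sketch)

def next_token(prefix):
    """Toy stand-in for greedy decoding: next token is a fixed function of the prefix."""
    return (sum((i + 1) * t for i, t in enumerate(prefix)) * 31 + 7) % VOCAB_SIZE

def autoregressive_decode(prompt, n):
    """Generate n tokens one at a time, each conditioned on all previous tokens."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(next_token(seq))
    return seq[len(prompt):]

def jacobi_decode(prompt, n, seed=0):
    """Jacobi (fixed-point) iteration over an n-token block.

    Every position is recomputed from the previous iterate, so all n updates
    within one iteration are independent and could run in parallel.
    Returns the converged tokens and the number of iterations used.
    """
    rng = random.Random(seed)
    guess = [rng.randrange(VOCAB_SIZE) for _ in range(n)]  # random initialization
    steps = 0
    while True:
        new = [next_token(list(prompt) + guess[:i]) for i in range(n)]
        steps += 1
        if new == guess:  # fixed point reached: nothing changed
            return guess, steps
        guess = new

if __name__ == "__main__":
    prompt = [3, 1, 4]
    tokens, steps = jacobi_decode(prompt, 8)
    print(tokens == autoregressive_decode(prompt, 8), steps)
```

With this toy function the iteration fixes at least one more leading token per pass, so it needs at most n + 1 passes for n tokens and gains nothing over sequential decoding. The speedup described above comes from training the model so that many tokens become correct in a single pass.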

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

Large-Language-Model-Notebooks-Course

This free, hands-on course focuses on Large Language Models and their applications, using models from OpenAI and the Hugging Face library. The course is divided into three major sections: Techniques and Libraries, Projects, and Enterprise Solutions. It covers topics such as chatbots, code generation, vector databases, LangChain, fine-tuning, PEFT fine-tuning, soft prompt tuning, LoRA, QLoRA, model evaluation, knowledge distillation, and more. Each section contains chapters with lessons supported by notebooks and articles. The course aims to help users build projects and explore enterprise solutions using Large Language Models.

git-mcp

GitMCP is a free, open-source service that transforms any GitHub project into a remote Model Context Protocol (MCP) endpoint, allowing AI assistants to access project documentation effortlessly. It empowers AI with semantic search capabilities, requires zero setup, is completely free and private, and serves as a bridge between GitHub repositories and AI assistants.

gollama

Gollama is a tool designed for managing Ollama models through a Text User Interface (TUI). Users can list, inspect, delete, copy, and push Ollama models, as well as link them to LM Studio. The application offers interactive model selection, sorting by various criteria, and actions using hotkeys. It provides features like sorting and filtering capabilities, displaying model metadata, model linking, copying, pushing, and more. Gollama aims to be user-friendly and useful for managing models, especially for cleaning up old models.

mcpd

mcpd is a tool developed by Mozilla AI to declaratively manage Model Context Protocol (MCP) servers, providing a consistent interface for defining and running tools across different environments. It bridges the gap between local development and enterprise deployment by providing secure secrets management, declarative configuration, and seamless environment promotion. mcpd simplifies the developer experience by offering zero-config tool setup, language-agnostic tooling, version-controlled configuration files, enterprise-ready secrets management, and a smooth transition from local to production environments.

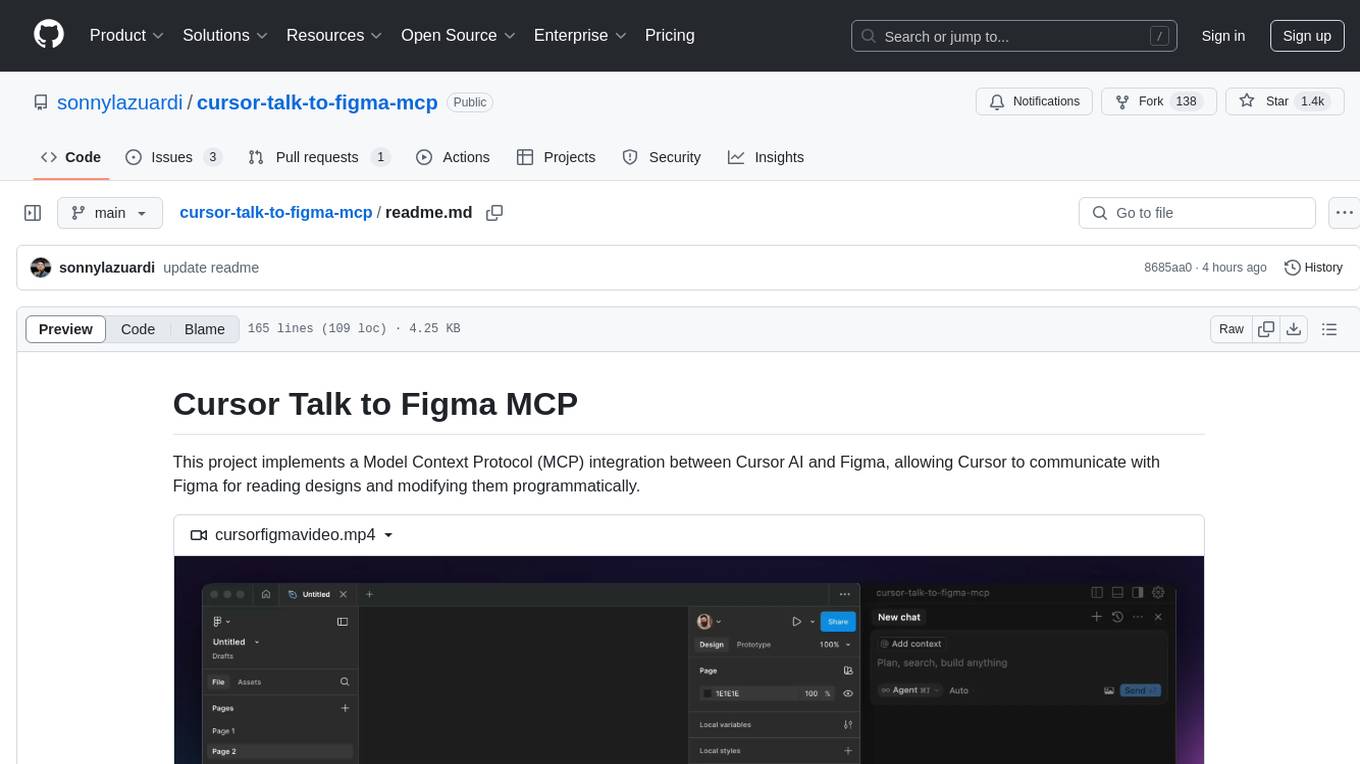

cursor-talk-to-figma-mcp

This project implements a Model Context Protocol (MCP) integration between Cursor AI and Figma, allowing Cursor to communicate with Figma for reading designs and modifying them programmatically. It provides tools for interacting with Figma such as creating elements, modifying text content, styling, layout & organization, components & styles, export & advanced features, and connection management. The project structure includes a TypeScript MCP server for Figma integration, a Figma plugin for communicating with Cursor, and a WebSocket server for facilitating communication between the MCP server and Figma plugin.

awesome-mcp-servers

Awesome MCP Servers is a curated list of Model Context Protocol (MCP) servers that enable AI models to securely interact with local and remote resources through standardized server implementations. The list includes production-ready and experimental servers that extend AI capabilities through file access, database connections, API integrations, and other contextual services.

ai-clone-whatsapp

This repository provides a tool to create an AI chatbot clone of yourself using your WhatsApp chats as training data. It uses the Torchtune library for finetuning and inference. The code includes preprocessing of WhatsApp chats, finetuning models, and chatting with the AI clone via a command-line interface. Supported models are Llama3-8B-Instruct and Mistral-7B-Instruct-v0.2. Hardware requirements include approximately 16 GB of VRAM for QLoRA finetuning of Llama3 with a 4k context length. The repository addresses common issues such as adjusting training parameters and preprocessing non-English chats.

aligner

Aligner is a model-agnostic alignment tool that learns correctional residuals between preferred and dispreferred answers using a small model. It can be directly applied to various open-source and API-based models with only one-off training, suitable for rapid iteration and improving model performance. Aligner has shown significant improvements in helpfulness, harmlessness, and honesty dimensions across different large language models.

1.5-Pints

1.5-Pints is a repository that provides a recipe to pre-train models in 9 days, aiming to create AI assistants comparable to Apple OpenELM and Microsoft Phi. It includes model architecture, training scripts, and utilities for 1.5-Pints and 0.12-Pint developed by Pints.AI. The initiative encourages replication, experimentation, and open-source development of Pint by sharing the model's codebase and architecture. The repository offers installation instructions, dataset preparation scripts, model training guidelines, and tools for model evaluation and usage. Users can also find information on finetuning models, converting lit models to HuggingFace models, and running Direct Preference Optimization (DPO) post-finetuning. Additionally, the repository includes tests to ensure code modifications do not disrupt the existing functionality.

AwesomeResponsibleAI

Awesome Responsible AI is a curated list of academic research, books, code of ethics, courses, data sets, frameworks, institutes, newsletters, principles, podcasts, reports, tools, regulations, and standards related to Responsible, Trustworthy, and Human-Centered AI. It covers various concepts such as Responsible AI, Trustworthy AI, Human-Centered AI, Responsible AI frameworks, AI Governance, and more. The repository provides a comprehensive collection of resources for individuals interested in ethical, transparent, and accountable AI development and deployment.